Dr. Yoav Bergner

Title: What science educators should know about psychometrics

Group Meeting Date & Time: Thursday, February 28, 2013 @ 2:00 pm

View the slides from Dr. Bergner’s Group Meeting

Yoav Bergner earned his Ph.D. in theoretical physics at MIT before becoming a sculptor and furniture-maker for five years, a public school science teacher for three years, and subsequently an education researcher. Dr. Bergner is currently a postdoc in Professor David Pritchard’s RELATE (Research in Learning, Assessment, and Tutoring Effectively) group at MIT, analyzing data from online learning environments like edX with techniques from educational data mining and psychometrics. In his group meeting, titled “What every science educator should know about psychometrics”, Dr. Bergner gave an enlightening and in-depth overview of the field of psychometrics designed to aid science educators in their development of effective educational assessments.

Dr. Bergner started his group meeting with a brief overview of psychometrics, the quantitative measurement of psychological phenomena, a field informed by many disciplines, including statistics, psychology, psychophysics, cognitive science and computer science. A major component of psychometrics is the development of accurate and valid testing strategies, and educational testing was the focus of Dr. Bergner’s talk. Dr. Bergner referred to Evidence Centered Design (Mislevy, Steinberg and Almond, 2003) as a guiding principle: education researchers should think from the beginning about the inferences they want to draw when framing the assessment and analysis strategy.

Dr. Bergner’s talk focused on four topic areas, which he emphasized were not intended to be exhaustive: measurement and constructs, reliability and validity, graphs, and item response theory. He also provided some historical references, which are listed at the end of this group meeting summary.

The first component of psychometrics discussed was the interplay between measurement and constructs, where a construct is the attribute that an assessment is assumed to reflect, or in other words, what we think we are measuring. Constructs are extremely diverse and include scholastic aptitude, critical thinking and personality characteristics such as extroversion. As educators, we try to assess people’s particular attributes and assign quantitative values to those constructs through the act of measurement. It is important to keep in mind, however, that a particular measure applies only in the context of a given construct. Despite the desire to apply quantitative measures to a particular construct, there is almost always an area that is open to interpretation. Determining the specific construct that needs to be assessed enables the researcher to start thinking about how the construct can be measured.

Two additional components of psychometrics, reliability and validity, should be kept in mind when constructing assessments. Reliability is a quantitative measure of test consistency, similar to measurement precision. For example, if students were given oral exams by individual professors, a relevant reliability measure would be inter-rater reliability, such as Cohen’s kappa statistic, which measures the extent to which two assessors agree on the measure of a particular construct beyond the agreement expected by chance. Validity is equally important: it concerns the actual interpretation of the scores with respect to the construct. An assessment can suffer from construct invalidity when its construct is too narrow or too broad, which makes interpretations of the results inaccurate.
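To make the idea of agreement beyond chance concrete, here is a minimal Python sketch of Cohen’s kappa for two raters. The function name `cohens_kappa` and the pass/fail ratings are hypothetical illustrations and do not come from Dr. Bergner’s talk. Kappa is computed as (observed agreement − chance agreement) / (1 − chance agreement), where chance agreement is derived from how often each rater uses each category.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same students (illustrative)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of students.")
    n = len(rater_a)

    # Observed agreement: fraction of students given the same rating by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: probability that the raters agree if each assigns
    # categories independently at their own observed rates.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used one identical category for every student.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical oral-exam outcomes from two professors for ten students.
professor_1 = ["pass", "pass", "fail", "pass", "fail",
               "fail", "pass", "pass", "fail", "pass"]
professor_2 = ["pass", "fail", "fail", "pass", "fail",
               "pass", "pass", "pass", "fail", "pass"]

kappa = cohens_kappa(professor_1, professor_2)
print(round(kappa, 3))  # 0.583
```

In this made-up example the professors agree on 8 of 10 students (0.80), but 0.52 of that agreement would be expected by chance, so kappa is noticeably lower than the raw agreement rate.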

Dr. Bergner demonstrated several examples of the use of graphs as visual representations of measurement models. On a test, each construct may be assessed by several questions, and the test may target a single construct or several factors. A measurement model expresses the likelihood that a student will answer a particular question correctly. A dynamic Bayesian network can be used to model a student’s changing state of learning as the student progresses through a particular course.
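One simple instance of a dynamic Bayesian network for learning is a two-state "knowledge tracing" model, in which a hidden variable (skill known or not known) is updated after each observed response. The summary does not say which network Dr. Bergner showed, so the Python sketch below is an assumption chosen for illustration; the function name `update_mastery` and the parameter values (`p_learn`, `p_slip`, `p_guess`) are hypothetical.

```python
def update_mastery(p_known, correct, p_learn=0.2, p_slip=0.1, p_guess=0.25):
    """One time step of a two-state knowledge-tracing model (illustrative).

    p_known: prior probability that the student already knows the skill.
    correct: True if the student answered the current question correctly.
    p_learn: assumed chance of learning the skill between questions.
    p_slip:  assumed chance of answering wrong despite knowing the skill.
    p_guess: assumed chance of answering right without knowing the skill.
    """
    # Bayes' rule: revise the belief about the hidden state given the response.
    if correct:
        known_and_observed = p_known * (1.0 - p_slip)
        unknown_and_observed = (1.0 - p_known) * p_guess
    else:
        known_and_observed = p_known * p_slip
        unknown_and_observed = (1.0 - p_known) * (1.0 - p_guess)
    posterior = known_and_observed / (known_and_observed + unknown_and_observed)

    # Transition: the student may learn the skill before the next question.
    return posterior + (1.0 - posterior) * p_learn


# Hypothetical sequence of responses as a student works through a course unit.
responses = [False, True, True, True]
p_known = 0.3  # assumed starting belief
for answered_correctly in responses:
    p_known = update_mastery(p_known, answered_correctly)
    print(round(p_known, 3))
```

Each pass through the loop corresponds to one time slice of the network: the response is the observed node, and the mastery probability carried forward is the belief about the hidden node.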

The last component of psychometrics that Dr. Bergner presented was item response theory, which represents and analyzes the interactions between a student and a particular test item. Item response theory works at a finer grain than classical test theory, which represents only the interactions between a student and the test as a whole. Additionally, item response theory places students’ abilities and the test questions on the same scale. Item response theory can form the basis of an analysis of problems within a test, including the difficulty level and “discrimination” of each particular question. Importantly, item response theory can be used to identify questions that function poorly and should be removed.
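As a rough illustration of how difficulty and discrimination enter an item response model, the Python sketch below uses the two-parameter logistic (2PL) form, P(correct) = 1 / (1 + exp(−a(θ − b))), where θ is student ability, b is item difficulty and a is item discrimination. The summary does not state which model Dr. Bergner presented, so the choice of 2PL is an assumption, and the function names, item parameters and grid search are hypothetical.

```python
import math


def p_correct(theta, a, b):
    """2PL probability that a student of ability theta answers an item correctly."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def log_likelihood(theta, items, responses):
    """Log-likelihood of a response pattern; items is a list of (a, b) pairs."""
    total = 0.0
    for (a, b), is_correct in zip(items, responses):
        p = p_correct(theta, a, b)
        total += math.log(p) if is_correct else math.log(1.0 - p)
    return total


def estimate_ability(items, responses):
    """Crude maximum-likelihood ability estimate over a grid from -4 to 4."""
    grid = [step / 100.0 for step in range(-400, 401)]
    return max(grid, key=lambda theta: log_likelihood(theta, items, responses))


# Hypothetical items as (discrimination, difficulty) pairs.
items = [(1.0, -1.0), (1.0, 0.0), (1.0, 1.0)]

# Ability and difficulty share one scale: when theta equals b, P(correct) is 0.5.
print(p_correct(0.0, 1.0, 0.0))  # 0.5

# A student with two correct answers is placed higher than one with a single correct answer.
stronger = estimate_ability(items, [1, 1, 0])
weaker = estimate_ability(items, [1, 0, 0])
print(stronger > weaker)  # True
```

Under this form, an item with discrimination near zero has an almost flat response curve, meaning it barely separates stronger from weaker students; that is one way a poorly functioning question can show up in the analysis.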

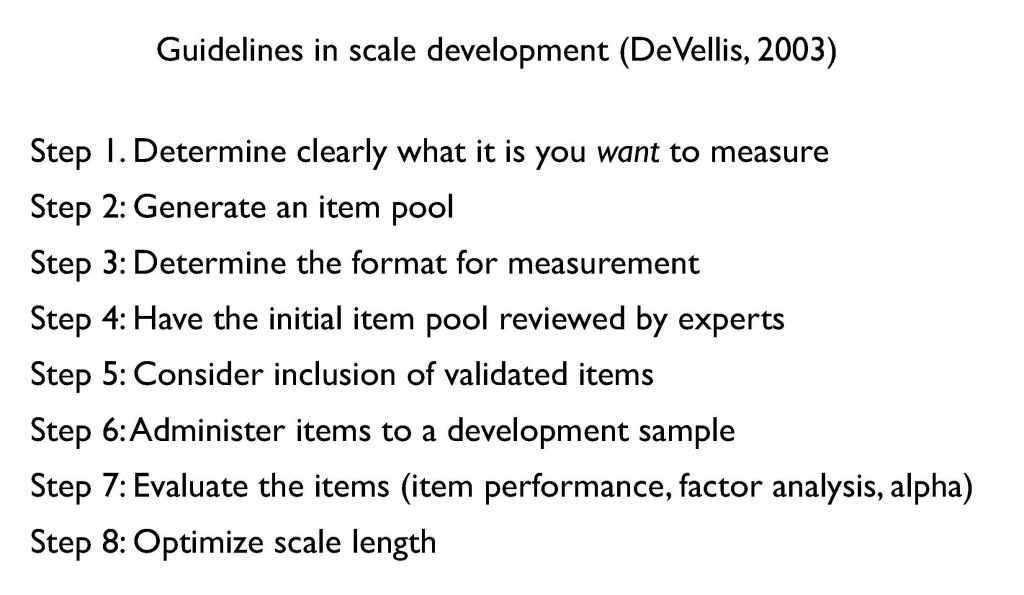

At the end of his talk, Dr. Bergner presented an eight-step framework for creating a properly designed assessment instrument that draws on all of the components of “scale development” (DeVellis, 2003). The eight steps describe a process of progressively generating and evaluating an assessment instrument.

References

Cronbach, LJ, Meehl, PE. (1955) Construct validity in psychological tests. Psychological Bulletin 52: 281-302.

DeVellis, RF. (2003) Scale Development: Theory and Applications, Chapel Hill. (SAGE Publications Inc.)

Messick, S. (1995) Validity of psychological assessment. American Psychologist 50(9): 741-749.

Stevens, SS. (1946) On the theory of scales of measurement. Science 103(2684): 677-680.